The Algorithmic Stop and Frisk of Black Communities Brought To You By Palantir

Technocratic Neo-Apartheid Scales White Supremacy Globally and Back Again

Palantir isn’t only tracking what you do; it is building a profile of who it thinks you will become. Its software powers ICE, police, intelligence, and child welfare agencies, turning “risk prediction” into a tool for preemptive control.

Introduction

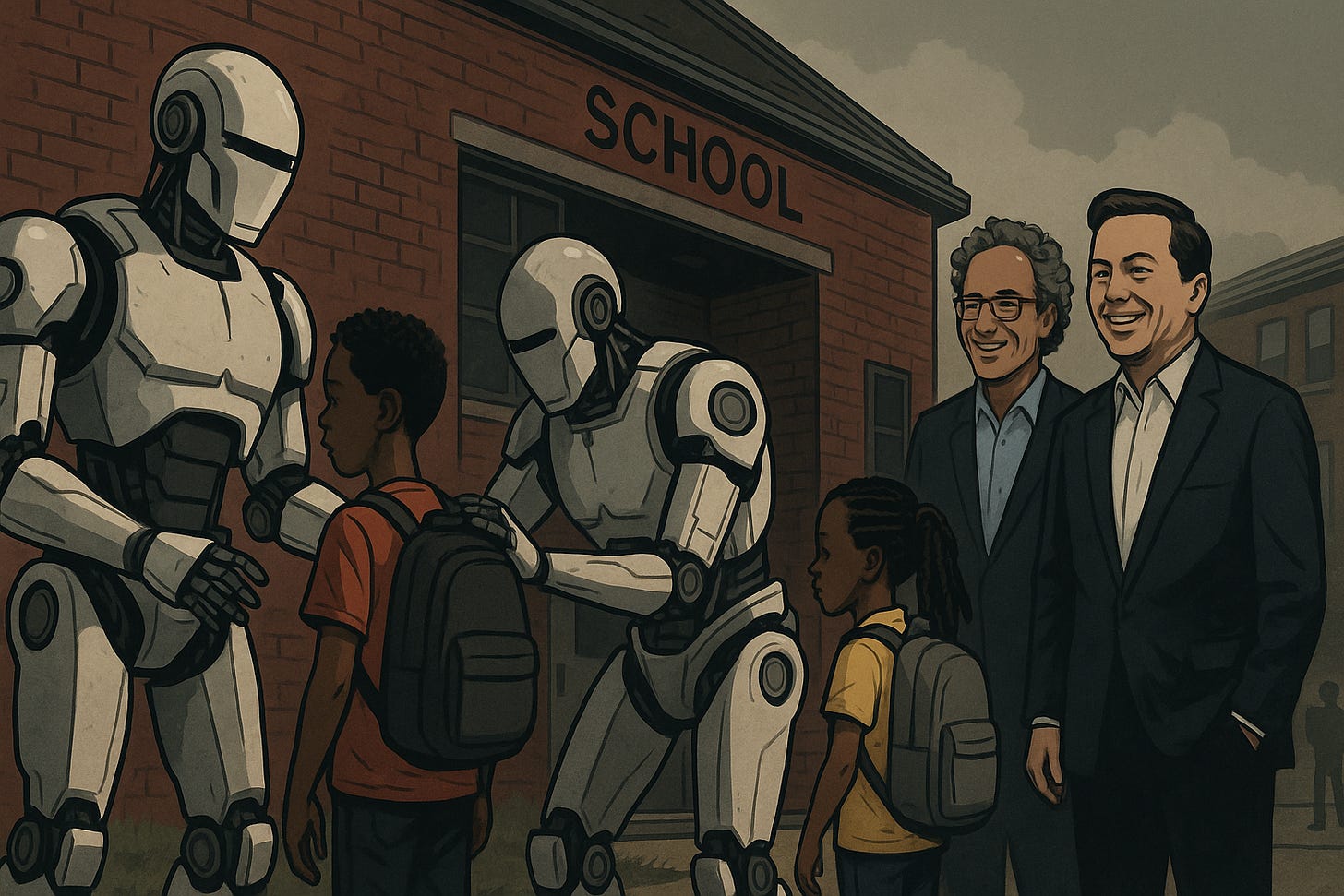

In April 2025, AIM Media House reported how Palantir went from running a secret New Orleans Police Department predictive-policing pilot (2012–2018) to building AI systems for global war. That early project, done without public consent, turned an entire Black-majority city into a live testing ground for predictive policing.

Now Palantir’s technology has grown far beyond that. The same systems first tested on Black neighborhoods are used on battlefields. They are improved under wartime conditions, built into alliance networks like NATO, and sold to partner nations. Once proven abroad, they return to the United States with new powers. These tools link police, immigration enforcement, and social services into a single connected system for control.

For communities that have already been heavily watched, stopped, arrested, and jailed at higher rates, this is not a distant problem. It is the use of decades of racially biased policing data to train AI systems that lock in and expand white supremacist practices—at home and worldwide.

Turning Over-Policing Into Policy by Code

The New Orleans pilot proved two things:

It was possible to merge very different types of data, like arrest records, social media activity, and court files, into risk lists and relationship maps.

Such systems could run for years without public approval, even in cities with long records of racist policing.

These histories are not just old stories. They are active systems. Every stop, ticket, arrest, and field interview entered into a police database becomes a lasting digital record. Over decades, these records pile up, and they are disproportionately about Black residents. This is not because Black people offend more, but because they are targeted more by police.

These records do not stay in one system. They travel across agencies and even across states, shaping how entire communities are seen by government. Once stored in databases, biased records are treated as facts. AI tools built on them recreate the same patrol maps, enforcement patterns, and suspicion profiles that created the data in the first place.

Palantir’s systems take these records as raw material. They do not correct for the bias in how the data was made. The result is policy by code—old patterns of policing, written into software and applied automatically.

How Palantir’s Expansions Lock in the Threat

Military AI Comes Home

Palantir builds military tools such as the Maven Smart System, which NATO has adopted, and the U.S. Army’s vehicle-mounted TITAN targeting stations. These systems fuse information from many sources, identify targets quickly, and recommend or help carry out action.

The same setup works for domestic policing.

Military technology often comes home. Drones, mapping tools, and counterinsurgency software have followed this path before. If a system can find insurgents abroad, it can find “high-risk” people in U.S. cities.

When redeployed at home, these systems mix local arrest records with federal intelligence and sometimes foreign data. This adds more power to predictive policing models that were already biased from the start.

Consolidating the Enforcement Rail

The Army’s enterprise software agreement with Palantir, worth up to ten billion dollars over ten years, merges dozens of contracts into one long-term deal. This makes Palantir the default tool for key decision-making.

This setup does two things:

It locks in the vendor. Once agencies invest in training and integration, it is hard and costly to switch.

It locks in the definitions. If Palantir’s software defines a “risk” in a certain way, that definition becomes standard across agencies.

In civilian enforcement, ICE’s “ImmigrationOS” does the same thing. It links immigration systems to health and social service databases. Once integrated, a flag in one area can instantly trigger enforcement in another.

Black Communities as Perpetual Testbeds

Predictive policing focuses on areas with more recorded crime. Those areas are often Black neighborhoods, not because more crime happens there, but because more policing happens there. Palantir’s AI makes this cycle move faster.

Runaway Feedback Loops

A mostly Black neighborhood is marked as a priority patrol zone based on past arrest data. More patrols lead to more stops and tickets for minor things like jaywalking or expired tags. Those new records go back into the system and raise the area’s risk score. The score then calls for even more patrols. The loop repeats endlessly.
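The loop described above can be sketched as a small simulation. This is a minimal illustration under stated assumptions, not a model of any real product: the `Area` class, the `simulate` function, the starting counts, and the rule that the highest-scoring area gets 70 percent of patrols are all hypothetical. Both areas are given the same underlying offense rate, so any growing gap in the records comes from patrol allocation alone.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    recorded: float   # cumulative recorded incidents, the only thing the "risk score" sees
    true_rate: float  # incidents recorded per patrol; identical for both areas below


def simulate(areas, rounds=10, total_patrols=100, hot_share=0.7):
    """Each round, send hot_share of patrols to the area with the most records.

    Returns a list with each area's share of all recorded incidents per round.
    """
    history = []
    for _ in range(rounds):
        hot = max(areas, key=lambda a: a.recorded)
        others = [a for a in areas if a is not hot]
        for a in areas:
            if a is hot:
                patrols = total_patrols * hot_share
            else:
                patrols = total_patrols * (1 - hot_share) / len(others)
            # More patrols mean more recorded incidents, even at the same true rate.
            a.recorded += patrols * a.true_rate
        total = sum(a.recorded for a in areas)
        history.append({a.name: a.recorded / total for a in areas})
    return history


# Hypothetical starting point: area A has slightly more historical records than B.
areas = [Area("A", recorded=60, true_rate=0.1), Area("B", recorded=50, true_rate=0.1)]
history = simulate(areas)
for round_number, shares in enumerate(history, start=1):
    print(round_number, {name: round(share, 3) for name, share in shares.items()})
```

In this sketch, area A's share of the records rises every round even though nothing about the underlying behavior differs between the two areas. The small head start in the historical data is enough to keep A marked as the priority zone indefinitely.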

Cross-Domain Targeting

Consider a hypothetical case. A Black single mother applies for public housing. Her application triggers background checks across Palantir-linked systems. An arrest from ten years ago, long since dismissed, appears in a municipal file. That file flags her to a state safety unit. The flag moves to immigration enforcement, even though she is a citizen, and then to a federal fusion center. Soon she faces extra police visits, closer benefits checks, and more screening at airports, all because she applied for housing.
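The chain in this scenario can be pictured as a flag traveling across a graph of linked systems. The sketch below is purely illustrative: the `LINKS` map and the `propagate` function are hypothetical, and the system names simply mirror the scenario above rather than any documented integration. What it shows is that nothing in the traversal checks how the original case ended.

```python
from collections import deque

# Hypothetical data-sharing links between systems, following the scenario above.
LINKS = {
    "housing_application": ["municipal_records"],
    "municipal_records": ["state_safety_unit"],
    "state_safety_unit": ["immigration_enforcement"],
    "immigration_enforcement": ["federal_fusion_center"],
    "federal_fusion_center": [],
}


def propagate(start, links):
    """Return every system a flag reaches from `start`, in breadth-first order.

    The traversal follows links only. It never looks at the record's
    disposition, so a dismissed arrest spreads exactly like a conviction.
    """
    reached = [start]
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in links.get(current, []):
            if neighbor not in reached:
                reached.append(neighbor)
                queue.append(neighbor)
    return reached


reached = propagate("municipal_records", LINKS)
print(reached)
```

A safeguard would have to be added deliberately, for example by stopping propagation for dismissed or expunged records. Without one, connectivity alone decides how far a flag goes.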

Technocratic Neo-Apartheid in Practice

Preemptive Segregation of Opportunity

In the past, bias in jobs, housing, or education happened during the application process, where it could sometimes be seen and challenged. Now, Palantir’s systems assign people a risk score before they apply. This score can block them from jobs, apartments, loans, or licenses without their ever knowing why. Paths are closed before a person can even walk them.

Expansion of the Surveillance Perimeter

Policing used to focus on physical spaces like a block or a street corner. Now the “perimeter” is digital and follows people everywhere. A flag in one database can appear in others, across cities or states. A risk profile made from a traffic stop can show up when you go through airport security, apply for benefits, or enroll your child in school. The net is wider, and once you are in it, you stay in it.

Opacity and Impunity

Palantir’s software is proprietary and closed to outside inspection. The public, and sometimes even the agencies using it, cannot see how it works. If someone is denied housing or a job because of a risk score, they cannot easily find out why. Agencies hide behind vendor secrecy or national security. This leaves no clear path to challenge unfair decisions.

Technocratic Neo-Colonialism Abroad, Same Shit at Home

Palantir sells itself worldwide as a partner in security, counterterrorism, and border control. It offers governments the ability to combine military, intelligence, and civilian data. Many deals are made in countries with weak oversight, where the systems can be tested under extreme conditions.

Once refined abroad, these systems return to the United States as “proven” solutions. The same predictive models used to spot insurgents in a war zone can be used to label “high-risk” people in American cities.

The flow goes both ways. Data from U.S. policing—especially in Black communities—feeds into Palantir’s global systems. The logic of control is refined overseas and then brought back home with new abilities tested beyond U.S. law.

For Black communities, this means the tools that over-policed them here are now part of a global cycle of surveillance and control.

The Ideological Background

Technology is shaped by the beliefs of its creators. In 2024, Palantir CEO Alex Karp said the United States should make its enemies “wake up scared and go to bed scared.” He called for collective punishment, not just of enemies but of their families and friends, and dismissed international bodies as barriers to what he called “good.”

This is not just talk. It shows that Palantir’s leadership sees fear as a strategy. If fear is the goal, then harm to communities is not a mistake—it is part of the plan. For Black neighborhoods in the United States, already seen as problem zones by police, this mindset supports even harsher enforcement.

Technocratic Neo-Apartheid and Technocratic Neo-Colonialism are not side effects of technology. They are the products of an ideology that values control over fairness. Palantir’s systems are built with that logic inside them.

Risks and Harms

Bias Amplification

Policing data is already biased, and a large body of research on racial disparities in stops, searches, and arrests documents it. Any system built on that data will carry the bias forward. Palantir’s systems make the bias permanent.

Cross-Domain Retaliation

A single mistake in one database can lead to punishment across many others. A wrong entry in a police file can move into immigration, housing, jobs, and benefits systems. People can be targeted without a trial and without a chance to defend themselves.

Locked-In Governance

When an agency starts using Palantir’s systems, it becomes hard to leave. The way Palantir defines “risk” becomes the standard everywhere it is used. Even when harm is clear, change is slow and costly.

Conclusion

Military technology rarely stays overseas. The same AI that tracks insurgents abroad now profiles people at home. The same risk models that shape NATO targeting shape patrol routes in Black neighborhoods.

This is algorithmic stop and frisk on a global scale: refined abroad, built into allied systems, and brought back to strengthen Technocratic Neo-Apartheid in the United States.

The New Orleans pilot was the start. Today, that testbed is part of a worldwide control rail. And it still runs straight through the heart of America’s most targeted communities.